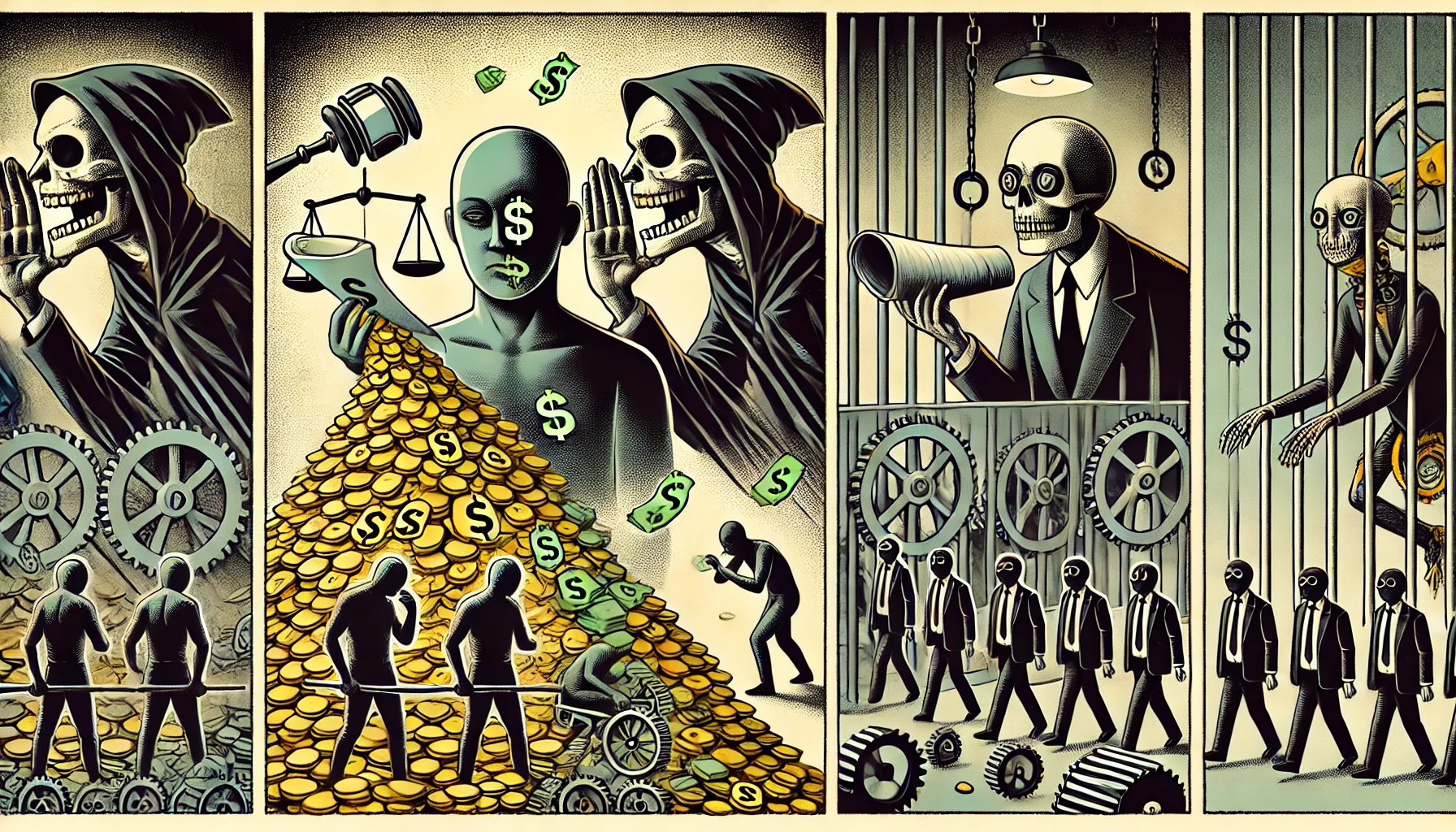

The Justice Department’s Selective Incompetence

The Department of Justice’s recent failure to redact the names of Jeffrey Epstein’s victims is not a routine clerical lapse. It…

From Chat Bots to Sovereignty: The Agentic Era and the New Geopolitical Order

This paper argues that the next decade of global competition will not be defined merely by who possesses the most…

Original Music by M1STYK | Genre Blending and Innovation

Step 1: Seeing the evil for what it is: an overinflated ego and a greedy spirit within. We need to…

The AI Misalignment Dilemma & the Need for Global Regulations

The AI Misalignment Dilemma & the Need for Global Regulations Based on “Current Cases of AI Misalignment and Their Implications…

ChatGPT Prompted Stand-Up Comedian (Hilarious!)

Popular Specialized ChatGPT: “Stand-Up Comedian.” I had noticed this model while perusing the Sider AI catalogue before and made a…

In anticipation of my next post, where I’ll be covering the urgent matter of AI Safety and Ethics.

I leave you with this handy link: https://drive.proton.me/urls/X0ND2YW9JR#cqubPclwmFb8. It takes you to a PDF written by Leonard Dung. A well-respected…

The Uni-Party Setup:

Introduction: The media has sold America’s political story as a clash of red vs. blue, but the scoreboard never changes:…

Call out the 5th Column (Remastered)

Check out the latest activist anthem and powerhouse jam by MiStyk. You’ll be sure to order the next round of…

The Two-Party System and the Convergence of Power

A Historical Analysis of Political Co-operation. Introduction: If you’ve spent any time on X (formerly Twitter) lately, then you’ve probably…